The Problem

We all have those moments: someone shares a 45-minute YouTube video, and you want to know if it's worth your time. I wanted a quick way to get summaries of YouTube videos without manually watching them or copy-pasting URLs into various tools.

The solution? A personal Telegram bot that instantly summarizes any YouTube video I send it.

What I Built

I created an n8n workflow that:

- Receives YouTube links via a Telegram bot

- Extracts video transcripts using a custom Flask API

- Sends the transcript to Azure OpenAI (GPT-4o-mini) for summarization

- Returns a concise 3-5 point summary back to Telegram

Important: I added authentication to ensure only I can use the bot—this is a personal learning project, not a public service, and I wanted to avoid unexpected API costs.

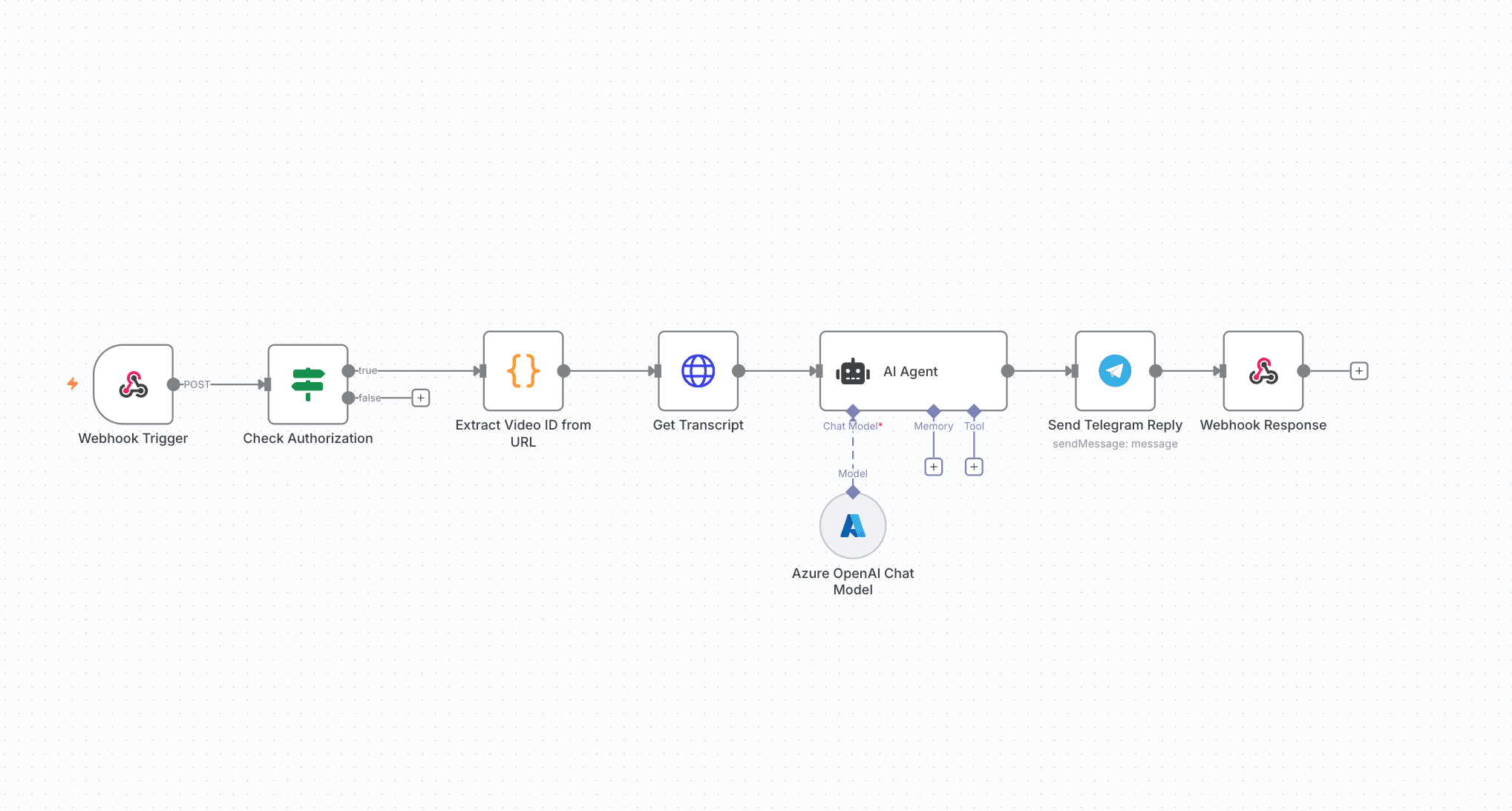

The Architecture

Components

Telegram Bot: The user interface. I send YouTube links here and receive summaries.

n8n Workflow: The orchestration layer connecting all services.

Custom YouTube Transcript API: A Flask API using yt-dlp to extract video transcripts, running in Docker.

Azure OpenAI: GPT-4o-mini model for generating intelligent summaries.

Workflow Steps

Here's how the workflow operates:

- Webhook Trigger: Receives POST requests from Telegram when I send a message

- Authentication Check: Validates that the message comes from my Telegram chat ID

- Video ID Extraction: JavaScript code extracts the YouTube video ID from various URL formats

- Transcript Fetching: HTTP request to my local transcript API at http://youtube-transcript-api:5000/transcript

- AI Summarization: Azure OpenAI processes the transcript and generates 3-5 key points

- Telegram Reply: Sends the formatted summary back to me

- Webhook Response: Acknowledges receipt to Telegram

Key Implementation Details

Authentication

The most critical part was limiting access to just me. I used an n8n "If" node that checks two conditions:

- Message text exists (it's not an image or sticker)

- Chat ID equals my personal Telegram ID

// Conditions in n8n
$json.body.message.text (exists)
$json.body.message.chat.id (equals YOUR_CHAT_ID)

If either condition fails, the workflow stops—no transcript is fetched, no API calls are made, no costs incurred.
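For readers who prefer code to node settings, the same gate can be sketched as a plain JavaScript function. This is not the "If" node itself, only an illustration of its two conditions; `isAuthorized` and the `ALLOWED_CHAT_ID` value are hypothetical names and placeholders.

```javascript
// Placeholder value: replace with your own Telegram chat ID.
const ALLOWED_CHAT_ID = 123456789;

// Hypothetical helper mirroring the two "If" node conditions.
// `body` is the JSON payload Telegram posts to the webhook.
function isAuthorized(body, allowedChatId = ALLOWED_CHAT_ID) {
  const message = body && body.message;
  if (!message) return false; // update types without a `message` field

  // Condition 1: the message carries text (photos and stickers do not).
  const hasText = typeof message.text === "string" && message.text.length > 0;

  // Condition 2: the chat ID matches the single allowed user.
  const isOwner = Boolean(message.chat) && message.chat.id === allowedChatId;

  return hasText && isOwner;
}
```

Both checks must pass, so a sticker from me and a text message from a stranger are rejected alike.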

Handling YouTube URLs

YouTube URLs come in many formats: youtube.com/watch?v=, youtu.be/, embeds, etc. I wrote a JavaScript code node to extract video IDs reliably:

const url = $input.first().json.body.message.text;
const videoIdMatch = url.match(/(?:youtu\.be\/|youtube\.com\/watch\?v=|youtube\.com\/embed\/|youtube\.com\/v\/)([a-zA-Z0-9_-]{11})/);
const videoId = videoIdMatch ? videoIdMatch[1] : null;
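One limitation of the regex: it expects `v=` to be the first query parameter, so a link like `youtube.com/watch?feature=share&v=...` would not match, and neither would a Shorts link. A possible alternative, sketched here as a standalone function rather than the code node from the workflow, parses the URL first and then inspects its parts. The function name `extractVideoId` and the list of accepted path prefixes are my own assumptions.

```javascript
// Hypothetical alternative to the regex: parse the URL, then inspect it.
function extractVideoId(text) {
  // Take the first http(s) URL found in the message text.
  const match = String(text).match(/https?:\/\/\S+/);
  if (!match) return null;

  let url;
  try {
    url = new URL(match[0]);
  } catch {
    return null; // not a parseable URL
  }

  const host = url.hostname.replace(/^(www\.|m\.|music\.)/, "");
  const isId = (s) => /^[a-zA-Z0-9_-]{11}$/.test(s || "");

  if (host === "youtu.be") {
    const id = url.pathname.slice(1).split("/")[0];
    return isId(id) ? id : null;
  }

  if (host === "youtube.com" || host === "youtube-nocookie.com") {
    if (url.pathname === "/watch") {
      const id = url.searchParams.get("v"); // parameter order no longer matters
      return isId(id) ? id : null;
    }
    const parts = url.pathname.split("/").filter(Boolean);
    if (["embed", "v", "shorts", "live"].includes(parts[0]) && isId(parts[1])) {
      return parts[1];
    }
  }
  return null;
}
```

Inside an n8n code node, the input would come from `$input.first().json.body.message.text` as in the snippet above.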

The Transcript API

I built a simple Flask API that wraps yt-dlp for transcript extraction. It runs in Docker and connects to n8n via a shared Docker network:

networks:
  n8n_n8n-network:
    external: true

This allows n8n to call it at http://youtube-transcript-api:5000 without exposing ports externally.
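To make the call concrete, here is a rough sketch of what the HTTP request amounts to, written as plain JavaScript instead of an n8n HTTP Request node. Two things are assumptions on my part: that the API takes the video ID as a `video_id` query parameter, and that a successful response is JSON with a `full_text` string (the field name is inferred from the summarization prompt). Adjust both to match your own API.

```javascript
const TRANSCRIPT_API = "http://youtube-transcript-api:5000/transcript";

// Assumption: the API accepts the video ID as a `video_id` query parameter.
function buildTranscriptUrl(videoId, base = TRANSCRIPT_API) {
  const url = new URL(base);
  url.searchParams.set("video_id", videoId);
  return url.toString();
}

// Assumption: a successful response is JSON containing a `full_text` string.
// `fetchImpl` is injectable so the function can be exercised without a network.
async function fetchTranscript(videoId, fetchImpl = fetch) {
  const response = await fetchImpl(buildTranscriptUrl(videoId));
  if (!response.ok) {
    throw new Error(`Transcript API returned HTTP ${response.status}`);
  }
  const data = await response.json();
  if (typeof data.full_text !== "string" || data.full_text.length === 0) {
    throw new Error("Transcript API response has no full_text");
  }
  return data.full_text;
}
```

The hostname only resolves from containers attached to the same Docker network, which is why the address works from n8n but not from the host machine.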

Pro tip: YouTube occasionally shows "Sign in to confirm you're not a bot" errors. The solution? Export your browser cookies using a browser extension and mount them into the Docker container.

Azure OpenAI Integration

I used n8n's Azure OpenAI Chat Model node with GPT-4o-mini. The prompt is straightforward:

System: You are a helpful assistant that summarizes YouTube videos.
User: Summarize this YouTube transcript in 3-5 key points. Transcript:
{{ $json.full_text }}
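Expressed as a chat-style message array, the prompt might look like the sketch below. The n8n node assembles this for you, so the code is purely illustrative. The `buildMessages` name and the character cap are assumptions: I added the cap only to show one way of keeping very long transcripts within the model's context window, and the number is arbitrary.

```javascript
// Assumed cap on transcript length; tune it to your model and budget.
const MAX_TRANSCRIPT_CHARS = 100000;

// Hypothetical helper that builds the two-message prompt from a transcript.
function buildMessages(fullText, maxChars = MAX_TRANSCRIPT_CHARS) {
  // Truncate rather than fail when the transcript is very long.
  const transcript =
    fullText.length > maxChars ? fullText.slice(0, maxChars) : fullText;

  return [
    {
      role: "system",
      content: "You are a helpful assistant that summarizes YouTube videos.",
    },
    {
      role: "user",
      content: `Summarize this YouTube transcript in 3-5 key points. Transcript:\n${transcript}`,
    },
  ];
}
```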

The model responds with a clean, formatted summary that gets sent directly to Telegram.
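A 3-5 point summary will normally fit in one Telegram message, but the Bot API caps message text at 4096 characters, so a defensive version of the reply step could split longer output. The sketch below builds `sendMessage` payloads only and does not send anything; `buildReplies` is a hypothetical helper, not part of the workflow.

```javascript
const TELEGRAM_MAX_CHARS = 4096; // Bot API limit for one message's text

// Hypothetical helper: turn a summary into one or more sendMessage payloads.
function buildReplies(chatId, summary, maxChars = TELEGRAM_MAX_CHARS) {
  const chunks = [];
  let rest = summary.trim();

  while (rest.length > maxChars) {
    // Prefer to break at the last newline within the limit.
    let cut = rest.lastIndexOf("\n", maxChars);
    if (cut <= 0) cut = maxChars; // no usable newline: hard cut
    chunks.push(rest.slice(0, cut));
    rest = rest.slice(cut).trimStart();
  }
  if (rest.length > 0) chunks.push(rest);

  return chunks.map((text) => ({ chat_id: chatId, text }));
}
```

Each returned object has the `chat_id` and `text` fields that `sendMessage` expects, with the chat ID taken from the original webhook payload.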

What I Learned

n8n is Powerful but Requires Attention to Detail

- Referencing data between nodes using expressions like ={{ $('Webhook Trigger').item.json.body.message.chat.id }} takes practice

- The visual workflow makes debugging much easier than code-only solutions

Docker Networking Matters

Getting the transcript API and n8n to communicate required understanding Docker networks. Once I added them to the same network, everything clicked.

Authentication is Essential

Even for personal projects, adding a simple chat ID check prevents accidental usage and runaway costs.

AI Models are Surprisingly Good at Summarization

In my experience, GPT-4o-mini consistently produces high-quality summaries that capture the essence of a video, and I have yet to notice it hallucinating content.

Running Costs

This is close to free for my usage; the only metered component is Azure OpenAI:

- n8n: Self-hosted, no cost

- Azure OpenAI: Pay-per-token

- Telegram: Free

- Docker: Local resources

With authentication in place, I control exactly when the AI is called.

Future Improvements

Some ideas I'm considering:

- Add support for videos without transcripts using Whisper

- Store summaries in a database for future reference

- Add commands like /summary vs /detailed for different summary lengths

- Support playlist summarization

Try It Yourself

The full code is available on GitHub. You'll need:

- n8n instance (Docker recommended)

- Telegram bot token

- Azure OpenAI API key

- Docker for the transcript API

Import the workflow JSON, set up your credentials, update the chat ID to yours, and you're good to go!


Conclusion

This project was an excellent way to dive deeper into n8n's capabilities and learn about integrating various APIs and AI models. The real win was building something genuinely useful for my daily workflow—I now use this bot multiple times a week.

If you're learning n8n or exploring AI integrations, I highly recommend building something similar. The hands-on experience of connecting real services is invaluable.

This is a demo project built for learning purposes. The authentication ensures it remains a personal tool rather than a public service.